# LLM Now Provides Tools for Working With Embeddings - simonwillison.net

Synced: [[2023_11_30]] 6:03 AM

Last Highlighted: [[2023_09_05]]

## Highlights

[[2023_09_06]] [View Highlight](https://read.readwise.io/read/01h9krsp8chyyhg9cy2wxx99ev) [[ai]]

> Things you can do with embeddings include:

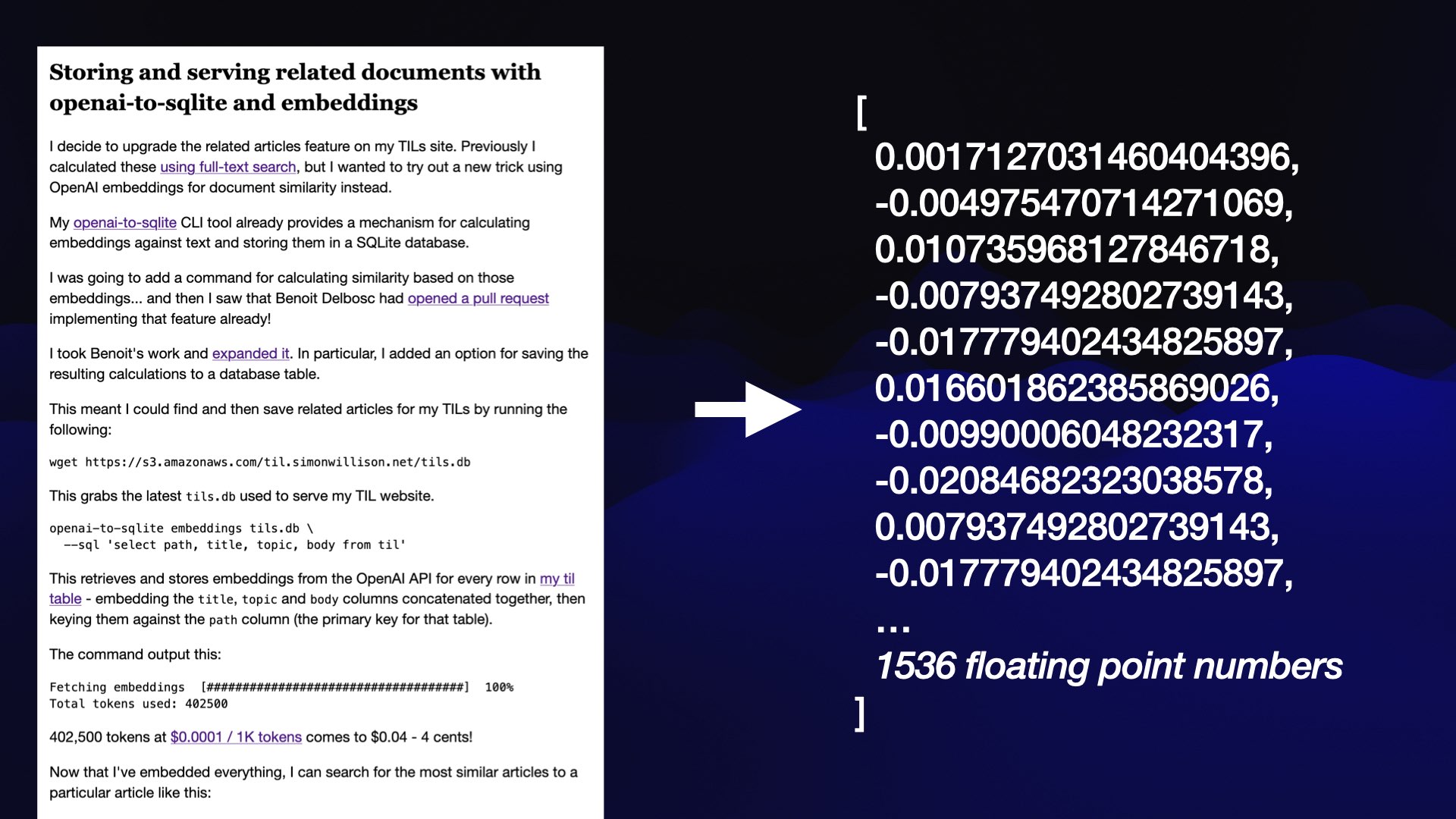

> 1. Find **related items**. I use this on [my TIL site](https://til.simonwillison.net/) to display related articles, as described in [Storing and serving related documents with openai-to-sqlite and embeddings](https://til.simonwillison.net/llms/openai-embeddings-related-content).

> 2. Build **semantic search**. As shown above, an embeddings-based search engine can find content relevant to the user’s search term even if none of the keywords match.

> 3. Implement **retrieval augmented generation**—the trick where you take a user’s question, find relevant documentation in your own corpus and use that to get an LLM to spit out an answer. More on that [here](https://simonwillison.net/2023/Aug/27/wordcamp-llms/#retrieval-augmented-generation).

> 4. **Clustering**: you can find clusters of nearby items and identify patterns in a corpus of documents

[[2023_09_06]] [View Highlight](https://read.readwise.io/read/01h9ksc4y4gcnf3ybnxedew7jf)

> Clustering with llm-cluster

> Another interesting application of embeddings is that you can use them to cluster content—identifying patterns in a corpus of documents.

This is super interesting I wonder if we can group documents automatically based on similarity? What about trying to cluster them around tag labels?